My friend Vadim Kulikov defended his PhD thesis in mathematics this past Saturday. I hadn’t written anything down, but I had had some thoughts circling around my head as to what remarks I could make at the post-party (this is quite a big deal in Finnish academia). I did speak, and although it went on for longer than is ideal (even though I didn’t say everything I might have), it was so well received that I decided to give as good an account of it here as my recollection permits. I have made some improvements and additions, but I might also have forgotten something, so remind me if you were there and noticed an omission.

I was inspired to say something by the last heading in the Introduction in Vadim’s thesis, which all math students here should definitely read. It’s very motivational, describing the sense of fulfillment at not only having achieved something worth achieving, but also at having gained a truly deep understanding of something, in this case certain mathematical objects and ideas.

I got to thinking about what it is that has made Vadim progress faster and achieve more than most of his peers. Some things are obvious and indispensable: natural talent, a strong ability to work and concentrate, a deep love of mathematics and understanding, and some luck in having a suitable advisor. I believe these are sufficient to make a great student of mathematics, but something more is required to make a mathematician.

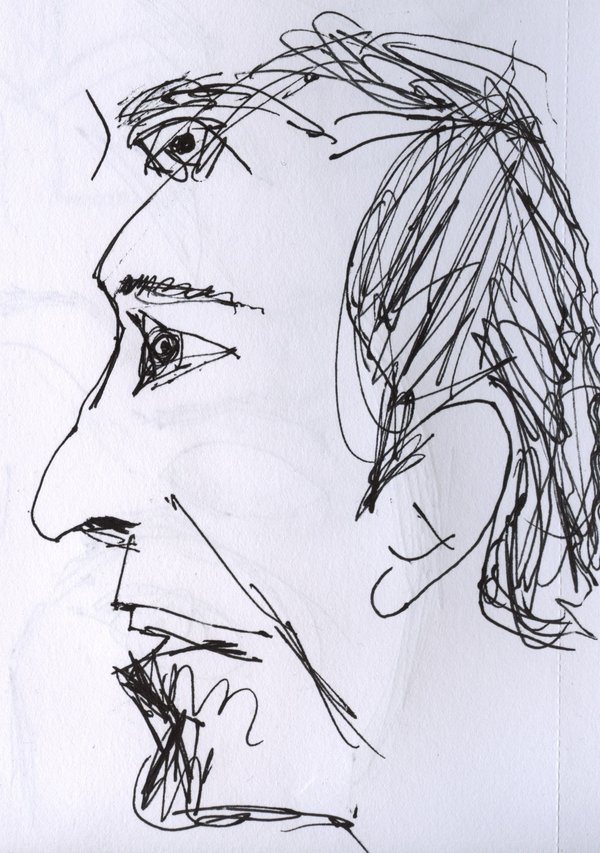

There is a concept in Zen Buddhism, shoshin, meaning “beginner’s mind”. The saying is that “the beginner sees many things, the expert sees few things”. The beginner’s mind is empty and without preconceptions, so when the beginner encounters a thing, his mind is not filled with the few things he has been taught to think about it, but with the totality of it.

For example, when appreciating a painting, the beginner sees a mass of brush strokes and a form, and he might understand what the picture depicts – but all in all, he is unguided and confused. As he gains knowledge, he starts to become an expert. He might identify the style of the painting and even the painter’s identity. He understands the use of various elements in the painting to signify ideas. He might know about the historical period in which the painting was created, and place the elements in that context. In brief, he gains the ability to see a painting, not be confused, have 5-10 thoughts about it and move on to the next one.

But at the highest levels of understanding, mastery, the Zen way is to have the beginner’s mind. The Zen master sees the mass of brush strokes. His mind is primed with every level of expertise, but it doesn’t force fixed ideas about what the painting is. It is full, yet empty.

There is actually some recognition of this in mathematics education at the university level. In many cases there is a simple way to solve an exercise if the student is aware of some higher-level theorem, without doing all the “boring” technical work you must do if you don’t know about the theorem. If a student presents such a solution, the lecturer will usually say “It’s nice that you know about this, but it would be better for you to do the problem without using this theorem, because it’s important to get to understand the internal details of these things.”

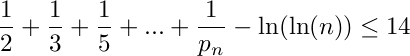

Vadim has a measure of this characteristic naturally, and I believe it is very valuable in doing creative work. One example of this is from when Vadim really had the beginner’s mind because he actually was a beginner. Vadim had come to our lukio (which the English might call a “sixth form college” and Americans might call “high school”) as a first-year student, and I was a second-year student. Vadim had already become enthusiastic about mathematics, but at his previous school there hadn’t been many other pupils with that interest, so he was happy to find a number of such people at our school. He was very eager to find problems to solve, so I told him to try to prove something during his next class: that every even number greater than two can be expressed as the sum of two prime numbers.

Well, as the mathematicians here have noticed, this is a famous open problem called Goldbach’s conjecture, so giving it to Vadim to solve was really just a practical joke on my part. “Someone’s too enthusiastic, let’s try to blunt his enthusiasm a bit.” Anyway, after the next class I asked Vadim how he’d gotten on and he said “I think I’ve almost solved it – I got the other direction, that when you add two primes you always get an even number.” I asked him, “What about 2 + 3?” “Oh, I forgot about that!”

When I revealed that the task was hopeless from the start, Vadim was not actually angry at me, or even all that deflated. To a beginner, all problems are open problems. Vadim even continued to think about the problem for some time, attacking it with whatever methods he knew about at that point.

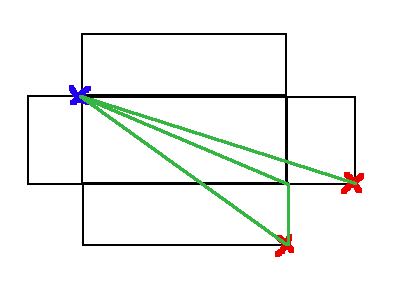

So with the beginner’s mind there comes a certain fearlessness about open problems and unknown things. Vadim kept this more or less intact during his studies. In mathematics it’s important to have the “complete simultaneous understanding” of the (Zen) master and the open, fearless mind of the beginner, because you have to be able to transplant ideas from one part of mathematics to another part, understanding the internal details well enough to know what needs to be changed and what doesn’t. If you “see many things” like a beginner, you can have surprising ideas.

However, once he had begun work as a graduate student with Tapani [Hyttinen] and Sy Friedman, Vadim began to tell me about certain frustrations he was experiencing. When he worked with experienced mathematicians in their domain of expertise, it would always be they who had significant flashes of insight. Vadim could redeem himself by working out technical details, but time and again it felt like open problems could only be solved by these “oracles”. Instead of many things he was beginning to see only one thing: “this is an open problem so I can’t solve it”. In this way, the joy of mathematics and the creative spirit of the beginner’s mind were beginning to suffer.

So it was a great relief to Vadim (and a great pleasure for me to hear) when he had a major breakthrough completely on his own, and produced an idea that resolved an open problem. The angst of the open problem was swept away, and he could once again look at things with fresh eyes. So I’m happy that he has passed through expertise to mastery of this field and retained shoshin, and I hope that he’s able to keep it in other parts of life as well, leading to a fruitful life of many creative discoveries.